Tuesday, 22 April 2025

09:54 AM

The human condition involves a fair bit of pain, so it's not surprising that Old English had a number of words for that unfortunate experience. Some, it turns out, are familiar to us even now. For example, one term was sār, which we know today as "sore, soreness". The Leechbook suggests that ...

Mugcwyrt ðæt sār ðara fōta of genimþ

("Mugwort the pain of the feet away takes")

In Beowulf (l. 975), there's a more vivid (indeed, poetic) description of how Grendel suffered:

ac hyne sār hafað

in nīð gripe nearwe befongen

balwon bendum

("but him pain has/in hostile-grip narrowly grasped/with baleful bonds")

Another OE word for pain was ece, which might initially look weird but is actually just "ache". Medieval medical texts were all over remedies for this:

Wiþ mūðes ece & wið tungan & wið þrotan genim fīfleafan wyrtwalan[1]

("For mouth pain or for tongue or for throat take root of cinquefoil")

Wiþ hēafod ece genim diles blōstman, sēoð on ele, smire þa þunwangan mid

("For head ache take dill flower, seethe it in oil, smear the temples with it")

One OE term for pain that seems to have been pretty common was wærc (sometimes wræc[2]). In the dictionary I use, I find these compounds, which frankly sound like a catalog of what it's like to get old, haha:

bānwræc: bone pain

brēostwærc: chest pain

cnēowærc: knee pain

ēagwræc: eye pain

endwærc: "pain in the anus", as it says in the dictionary

heafodwærc: head pain

heortwærc: heart pain

hypewærc: hip pain

inwræc: internal pain

liferwærc: liver pain

liþwærc: limb (joint) pain

sculdorwærc: shoulder pain

swēorawærc: neck pain

tōþwærc: tooth pain

þēohwræc: thigh pain

wambwærc: stomach pain

The word wærc survived into the early days of modern English as wark or sometimes warch ("Hee hath beene sore pained with great warch in his bones"), but has since fallen out of use. Fortunately (?), we were able to pick up the word pain from French, so we still have a full complement of words to describe our sufferings, whew. ;)

__________

[categories]

language

Thursday, 17 April 2025

06:48 PM

In my Beowulf class, we’re about two-thirds of the way through. In this week's reading, I learned in line 2085 that Grendel the monster had a bag[1] that was made with orþanc; this is glossed as "skill, invention".

The word orþanc made sense to me only after I learned that þanc (the origin of "thanks") meant "thought; favor" and that the or prefix had a cognate in the German Ur, meaning "original" or "proto". So orþanc = "proto-thought", i.e., invention or skill. This sort of thing tickles me.

The or prefix in OE has several meanings, but in this sense of "original/proto" it also shows up in oreald (Ur-old="very old, primeval"[2]) and orlæg (Ur-law="fate, destiny"). This sense of the or prefix stopped being productive in English — that is, we can't create new words with it. But oddly, we did borrow some words from German (mostly in academic contexts) that use the equivalent Ur prefix, as in Urtext.

And maybe the German borrowing is a tiny bit productive? I found cites with references to ur-American, to the Ur- model of the Leica M3 [camera], and to an Ur- boutique hotel. On Facebook, the editor and linguist Jonathon Owen found a reference to an "ur-feedback loop".

I don't think this is the first time that we lost something in English and then borrowed it back from another language. English has never been fussy about where it gets its words.

__________

[categories]

language

Friday, 4 April 2025

09:54 AM

Today I learned two interesting and related facts about memory usage on my computer. (2-3/4 facts, actually)

Fact #1: audio crackling. I use a music streaming service, and over the last few days, the audio had started crackling in an annoying way. I initially thought it might have been a cable issue, so I fooled around with all the physical connections to my Bose subwoofer and speakers. This sometimes seemed to work, sort of. But no, the issue was just intermittent.

From a Quora (!) post, I learned that the culprit could be that I was running tight on memory in the computer. I fired up ye olde Task Manager. Indeed, when I first started it up and when the audio crackling was most evident, I had less than 10% free memory of the 16GB of RAM that's in my laptop.

Time to close some apps! And indeed, closing apps did bring down memory use, which did in turn seem to smooth out the audio. This probably explained why the crackling was intermittent; it coincided with occasions when various apps were really pushing on my RAM limit.

Fact #2: sleeping/discarded browser tabs. A notable memory hog on my computer is Chrome; I have 20+ tabs open at any given time, some of which I use constantly. For example, there's a cluster of tabs that I continually switch between when I'm doing my Old English homework.

I (reluctantly) closed a few tabs, and it did seem to reduce memory use; each tab I closed freed up more RAM. But I didn't want to close all the tabs — these are my emotional-support tabs. haha

However! It turns out that you can put Chrome tabs to sleep, so to speak. (Technically, for Edge the term is "sleep"; Chrome uses the term "discard".) When a tab is asleep/discarded, you still see the tab in the browser window, but it's a kind of placeholder; the content of that tab has been flushed out of memory. When you go back to the tab, the browser reloads the page. Each tab I put to sleep/discarded freed about 2% of memory.

Fact #2-1/2. From this exercise, I also learned about a UI thing I'd noticed. When a browser tab in Chrome is asleep/discarded, sometimes there's a gray dotted circle around the tab name:

Fact #2-3/4. By the way, to discard Chrome tabs, go to chrome://discards and use the links at the right side of the page to discard or load a tab.

[categories]

technology

Wednesday, 4 September 2024

08:04 AM

This week I start an Old English class in which we begin our reading of Beowulf. I believe that the arc for most (all?) Old English courses is to start with the basics, work one's way through shorter pieces, and culminate with reading the big B.

This week's assignment is to read the first 79 lines, in which Hrothgar has the great hall Heorot built. (Soon, as many of us know, it will be visited by Grendel ...)

I had to think for a bit about whether I even wanted to take this Beowulf class. Back in 1980, I took the Old English series at the University of Washington. That consisted of two parts: part 1 was grammar and shorter readings, part 2 was Beowulf, one semester (quarter?) each. As I've been taking OE classes again over the last couple of years, I marvel at how rapidly we must have worked our way through the readings. I absolutely could not keep that pace up now in my dotage.

The experience back in the day also must have traumatized me a little, because ever since I restarted Old English classes, I've been stressed about the prospect of revisiting Beowulf. I expressed this hesitancy to our instructor, who has assured us multiple times that the readings we've done so far — including "The Battle of Maldon", "The Wanderer", "The Seafarer", and the horrible (to me) "Andreas" poem — have prepared us well for tackling Beowulf.

But I also had to stop and think about a question that several people have asked me: why am I taking Old English classes at all? The answer at first was easy — because it's fun! I've had enough German to recognize and be delighted by the resemblance between OE and German, not to mention the joy of finding ancestral and fossil words of modern English.[1]

The text for our first year of OE was written by our instructor: a book about Osweald the Bear, a talking bear who has adventures in Anglo-Saxon England at a particularly interesting time in history. The text starts simple and becomes increasingly sophisticated, at times including snippets of "real" OE literature. It was a blast to read and discuss, with all of us keenly invested in what happens to Osweald and his friends.

After that we turned to existing literature, including bits from the Anglo-Saxon Chronicle, gospel translations, and various poems. These were more challenging, of course. That's especially true for the poems, which are syntactically complex — the subject of a sentence might be several lines after the verb and object(s), for example.

Plus poems rely on a broad vocabulary. As I heard somewhere, the alliteration used in Old English poems means poets needed a selection of synonyms that all started with different sounds. Thus, for example, the poem about the battle of Maldon has about two dozen words for "warrior", basically.[2] Until one has mastered all of this vocab (not so far, me), it can be a bit of a slog to stop and look up yet another unfamiliar word.

As the readings got harder and it took longer to get through them, I had to ask myself again whether I was having fun — or enough fun to continue. Did I actually want to read Beowulf?

I know from talking to some of the other students that many of them came to Old English through Tolkien and The Lord of the Rings. There are discussions sometimes in class about how Tolkien borrowed elements of Old English literature for his works. But I'm not a Tolkien guy (never read any of the books), so I do not get the pleasure of appreciating how the literature we're reading in class was echoed in LOTR.[3]

The joy of discovering correspondences and fossil vocabulary has faded a bit as I've gotten more used to Old English. I do still find pleasing word bits, though, like this from "The Wanderer":

Gemon hē selesecgas ond sincþege,

hū hine on geoguðe his goldwine

wenede tō wiste.

He remembered the hall warriors and treasure-receiving

How in his youth his gold-friend [lord]

Accustomed him to feasting.

... and learning that wenian ("accustom") is the source of our verb to wean [from].

But an unanticipated joy (unanticipated by me, that is) is that I have come to like some of the poetry a great deal. I've discovered a fondness for the fatalistic Saxon outlook on life, as well as for their pithy and wry observations. For example, the narrator of "The Wanderer", a poem in the persona of someone treading the paths of exile, makes this relatable observation:

… ne mæg weorþan wīs wer, ǣr hē āge

wintra dǣl in woruldrīce.

A man cannot become wise before he's had a share of winters in this world.

And there's this ubi sunt lamentation from later in the poem:

Hwǣr cwōm mearg? Hwǣr cwōm mago? Hwǣr cwōm māþþumgyfa?

Hwǣr cwōm symbla gesetu? Hwǣr sindon seledrēamas?

What's become of the horse?

What's become of the young warrior?

What's become of the treasure-giver?

What's become of the seats at the feast?

Where are the hall-joys?[4]

I was surprised at how much I enjoyed "The Battle of Maldon", a poem about how a troop of Saxon militia lost a battle against marauding Vikings, apparently recording a real incident. The poem is practically cinematic; you could make a movie of it today and it would hit all the tropes we're used to — the old commander rallying his inexperienced troops; the young man who sends away his beloved hunting falcon and turns to join the ranks; the ravens circling in anticipation; the old warrior who gets wounded but kills his attacker; the cowardly brothers who turn and run; the sad ends of individual courageous fighters.

And now I've started Beowulf again. The poem begins with a history of Scyld Scefing, a foundling who …

... sceaþena þrēatum,

monegum mǣgþum, meodosetla oftēah,

egsode eorlas …

oð þæt him ǣghwylc þāra ymbsittendra

ofer hronrāde hȳran scolde

gomban gyldan

... from troops of enemies,

from many nations, captured their mead-benches,

(and) terrified rulers …

until him each of the surrounding nations

over the seas [whale-road] had to obey

(and) to yield tribute

To which the poet adds this conclusion:

þæt wæs gōd cyning

That was a good king!

You can imagine a hall full of Saxons having a good whoop about that.

I don't know if I'll make it through all 3182 lines of the poem, but it seems like it has a promising start.

__________

[categories]

personal, language, readings

Sunday, 2 June 2024

08:46 PM

This is mostly a note to myself so that I don't have to look this up the next time I have to do this. The task is to have a quick way to get directly to the Customize Keyboard dialog box in Microsoft Word:

You use this dialog box to assign new (custom) keyboard shortcuts to Word commands or to macros. The usual way to get to this dialog is through the File menu:

- Choose File > Options.

- Click the Customize Ribbon tab.

- Click the Customize button next to Keyboard shortcuts.

This works but is tedious. Instead, you can assign a keyboard shortcut to get directly to this dialog. The trick is to use the Customize Keyboard dialog to assign a shortcut to the ToolsCustomizeKeyboard command:

Don't forget to click the Assign button!

Credits: I learned all of this from Stefan Blom's answer on a thread on the Microsoft Community site.

[categories]

MS Word

Friday, 31 May 2024

12:04 PM

The surest way to cast a critical eye on the amount of stuff that you have is to prepare for moving. The prospect of boxing up all that stuff and hauling it and finding a place to put it at the new domicile can lead a person to wonder why they even need all that. This is particularly true when you're downsizing from, say, a four-bedroom house with a double garage to, say, a two-bedroom condo with a single outdoor parking space.

We did this some years ago, which resulted in a huge yard sale and a sometimes-painful culling of all our stuff, especially books. But we did it, and in our little place we're a wee bit tight, but we manage.[1]

Chapter 2. Over this last winter, we spent about six months in San Francisco for my wife's work. During that time we lived in rentals. When we drove down, we took what we needed in one large suitcase and two small ones.[2] We bore in mind the sensible travel advice that you don't have to bring everything with you — your destination has drugstores and clothing stores (and for me, hardware stores, ha).[3] So while we were in SF, we made trips to Target and Daiso and other locales where we could fill in any missing stuff.

This worked well. Our rentals had laundry facilities, of course, so I was able to cycle through my limited wardrobe. And we were indeed able to get the additional things we needed — my wife needed some work clothes, for example.

But we bore in mind that these acquisitions were either temporary (to be left behind at the rentals or donated) or we'd have to haul them back with us. This mentality helped frame the question of whether to buy stuff: for everything that we acquired, what were we going to do with it when our stay in SF ended?

(One place where this kind of failed was with books — between visiting a gajillion bookstores and going to weekly library book sales, we acquired more books than we probably should have. I ended up shipping books back home via the mail before we left.)

Chapter 3. Back in Seattle! After unpacking the suitcases, I went to put away the wardrobe that had served me well during our extended leave. But when I opened my dresser drawers, I was kind of shocked: I have so much stuff. I had been living with a collection of, like, 9 or 10 shirts. But at home I have two drawers full of neatly folded t-shirts. Why? Why would I need a month's worth of t-shirts? I had the same experience in my closet — why do I need all these shirts/pants/sweatshirts/coats?

Well, I don't.

So another culling has begun. These days, when I pull out a shirt, I might look at it, and say yeah, no, you go in the Goodwill pile. This process is not as concentrated as if we were moving again, but it's steady and I'm determined to keep at it until I'm down to a collection of clothing that I actually use. Ditto kitchen stuff, linens, office supplies, leftover project hardware, and all the other stuff that just accumulates. (Books, mmm, that one's hard.)

Taking a break from our stuff, and living adequately with less of it, has really helped us look at it again with a bit of a critical eye. I suppose "Do I really need this?" isn't exactly a question about "sparking joy", but I hope it will be just as effective.

__________

[categories]

personal, general, travel

Thursday, 30 November 2023

10:34 PM

Recently I was at a grocery store in our neighborhood that still does a lot of business in cash. As I was waiting, I watched the cashier ring up the customer in front of me. The cashier deftly took the customer’s bill—a $50, I think—and counted out change. When it was my turn, I said “It looks like you’ve been doing this for a while”. “Oh, yes”, she said. “35 years”.

During my college years (1970s), I had a kind of gap year during which I worked for Sears, the once-huge department store. I was hired as a cashier, and the company put us through an extensive training program for the position.

For example, that’s where I learned something that I saw the grocery-store cashier do: when you accept a big bill from a customer, you don’t just stuff it into the tray. You lay it across the tray while you make change. That way, if you make change for a fifty but the customer says, “I gave you a hundred”, you can point at the bill and show that that’s what they’d given you.

My cashiering days were at the beginning of the era of computerized cash registers (no big old NCR ka-ching machine for us). Although our register could calculate change, they still taught us how to count out change manually. (One method is to count up from the purchase price to the amount the customer gave you, as you can see in a video.)
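To make the count-up method a bit more concrete, here's a minimal sketch in Python. It assumes US denominations from a penny up to a $20 bill (no half-dollars or larger bills) and works in whole cents to dodge floating-point rounding. How it picks the pieces (greedily, largest first) is my own assumption, not something from the Sears training; the part that mirrors what a cashier does is handing the change back smallest piece first while announcing the running total.

```python
# US denominations in cents, largest first.
# Assumption: no half-dollars and no bills larger than $20.
DENOMINATIONS = [2000, 1000, 500, 100, 25, 10, 5, 1]


def count_up_change(price_cents: int, tendered_cents: int) -> list[tuple[int, int]]:
    """Return (piece, running_total) pairs, counting up from price to tendered."""
    if tendered_cents < price_cents:
        raise ValueError("customer did not hand over enough money")

    # Break the change into pieces greedily, largest denomination first.
    remaining = tendered_cents - price_cents
    pieces = []
    for denom in DENOMINATIONS:
        count, remaining = divmod(remaining, denom)
        pieces.extend([denom] * count)

    # Hand the pieces back smallest first, announcing the running total
    # the way a cashier counts up from the purchase price.
    steps = []
    total = price_cents
    for piece in sorted(pieces):
        total += piece
        steps.append((piece, total))
    return steps


def dollars(cents: int) -> str:
    """Format a whole number of cents as dollars, e.g. 327 -> $3.27."""
    return f"${cents // 100}.{cents % 100:02d}"


if __name__ == "__main__":
    # Example: a $3.27 purchase paid with a $20 bill.
    print(f"That's {dollars(327)} out of {dollars(2000)} ...")
    for piece, total in count_up_change(327, 2000):
        print(f"  hand over {dollars(piece)}, say {dollars(total)}")
```

For a $3.27 purchase paid with a $20, this counts $3.28, $3.29, $3.30, $3.40, $3.50, $3.75, $4.00, $5.00, $10.00, and finally $20.00, landing exactly on the amount the customer handed over.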

We also learned to handle checks, which included phoning to get an approval code for checks over a certain amount. We learned to test American Express travelers checks to see if they were authentic. We learned to handle credit cards, which we processed using a manual imprinting machine that produced a carbon copy of the transaction.

We also learned to be on guard for various scams, such as the quick-change scams that try to confuse the cashier, as you can see in action in the movie Paper Moon.

There was of course lots more—cashing out the register, doing the weekly “hit report” of mis-entered product codes or prices (this was also before UPC scanners), and many other skills associated with being at the point of sale and handling money.

During my son’s college years (late 2000-oughts), he also worked as a cashier, in his case for Target and for Safeway. I quizzed him about his training. He remembered that the loss-prevention people at Target warned them about the quick-change scam, and it even happened to him once while he worked at Safeway.

But he doesn’t remember much training about how to handle change; at a lot of places, coin change is automatically dispensed by machine into a little cup. He does remember learning how to handle checks.

Most of their training, he remembers, was about how to use the POS terminal[1]—how to log in, how to enter or back out transactions, etc. A POS terminal is, after all, a computer—they were being trained in how to operate the computer, and less than in my day in how to handle cash money.

Shortly after I had my grocery-store experience with the experienced cashier, I was in line at a local coffee shop. The customer ahead of me paid in cash. When I got to the counter, I asked the amenable counter person, “This is going to be a sort of weird question, but how much training did they give you in how to handle cash?” “Little to none”, was her report.

And Friend Alan recounted this experience recently on Facebook about a cashier trying to figure out how to even take cash:

It might seem like old-school cashiering skills are becoming anachronistic. It’s not unusual in my experience that small shops and pop-up vendors only take cards, using something like Square. Frankly, I was surprised that the coffee shop with the informative counter person even took cash.

Moreover, we customers are more and more becoming our own cashiers. Many stores nudge customers toward self-checkout kiosks, in part by reducing the number of cashiers so that it’s faster to use the self-checkout.[2] The logical conclusion to this effort is the cashierless store (aka “Just walk out store”), where computers just sense your purchases and charge you.

Still, it’s not like cash is going to go away. Even in the age of debit cards and Venmo, people will still want to use cash for various private transactions.[3] Moreover, not everyone has a bank account, or wants one. Although individual merchants might decide to go card-only, many will still find it to their benefit to take cash.

That probably will continue to include the grocery store where I met the experienced cashier. For the sake of that store, I hope that she is able to pass her experience and training on to other cashiers who work there.

__________

[categories]

personal, general, technology

Friday, 17 November 2023

11:49 AM

We’re staying in San Francisco for a few months, so we’re in a rental. It’s nice enough, but there are little things here and there that feel like they could be improved. (For example, no rental I’ve ever been in has had enough lighting.) One such thing in this rental is the kitchen faucet:

I hand-wash dishes, so I like to have a sprayer attachment for the kitchen faucet. I reckoned that hey, they’re not expensive, I’ll just get one and attach it myself.

Well, not quite. I took the aerator off the existing faucet and went to a plumbing supply place, where I explained my quest to the woman. She got out one of those gauges that they use to determine size and thread count, but to her surprise, mine didn’t fit any of them.

Hmm. We went to find another guy who tried the same thing. At that point I mentioned that it’s possible that this faucet is from IKEA. Ah. “My condolences,” he said, adding that he couldn’t help me with metric sizes and threading.[1]

Being an old guy, I remembered that when I was a kid, we used to have a little shower-head-looking attachment for our kitchen sink. It just slipped over the end of the faucet. Did he have any of those? He did know what I was talking about but told me that those were long gone.

Well, not quite. I went online, and dang, there was the very thing I’d remembered:

Not only was the device still available, the web even told me that it was in stock at a hardware store within walking distance.[2] Price: $4.99.

And so I went to Cole Hardware off Market Street. The outside looked unpromising, but they’d somehow crammed a complete, old-school hardware store into a space that’s the size of a bodega—two floors’ worth.

I wandered up and down the tightly-packed aisles till I found the plumbing stuff. I had to get down on the floor and root around in the back, but sure enough, there it was: the Slip-On Wide Sprayrator (“For mobile home kitchen sinks,” wut). To my professional amusement, the instructions for installing it are wrong—they show you how to screw it on, whereas the entire point is that you don’t:

Whatever. I had to enlarge the hole a little bit, but it did in fact slip on, and I now can enjoy the benefits of a sprayer while I wash the dishes:

I enjoyed the entire experience so much—success in finding a nearly ancient piece of plumbing technology, plus my visit to Cole Hardware—that I got myself a hat that features a skyline made of tools:

At this point, it would probably be wise of me not to study our rental apartment too closely. As much as I’m now feeling empowered, I should probably rein in any further urges to improve the place.

[categories]

personal, house, travel

Tuesday, 14 November 2023

02:12 PM

After I published my book, I was chatting with fellow word enthusiast Tim Stewart, who was one of the first readers. At one point he noted that there are a lot of references to various religious texts, and he gently asked “Do you have a background in Christianity?”

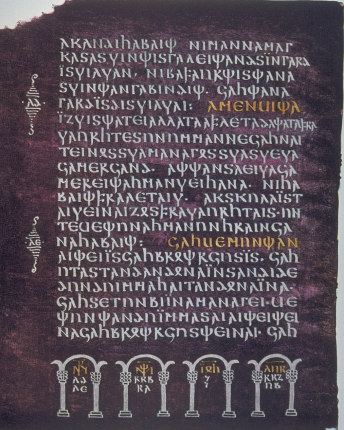

It was a keen observation. Per a casual count, I mention or cite the Bible about ten times, and I have references to the Lindisfarne Gospel, the Book of Common Prayer, and the Dead Sea Scrolls. I also have a reference to the Quran.

I can see why someone might conclude that I might have studied these texts in a religious context. But no. I was raised outside of any faith. In fact, because I didn’t go to Sunday school (or equivalent) and hadn’t read any religious texts growing up, I’ve often thought that I’ve been at a disadvantage when it comes to Biblical knowledge.

I apparently recognized this early on. When I was in high school, I took a class that was named something like “The Bible as Literature.”[1] I don’t remember much about that class, other than that I did a presentation on representations of the Madonna in art—something that seems like the type of knowledge one can have without having any religious motivation.

Such Biblical learning as I have came about more indirectly, when I got to university and started studying old languages. Literacy, hence written materials, for old languages often coincided with the Christianization of the people who spoke (wrote) those languages. A particularly salient case (one that I wrote about elsewhere) is that of the Gothic language: effectively, the only real text in that defunct language consists of fragments of a Bible translation.

In fact, it was a class in Gothic that sort of kickstarted my Bible-reading. Most old-language classes consist of studying grammar and then reading texts. Exams in those classes then usually consist of being given a passage and having to translate it.

I remember our first midterm exam in Gothic. The prof handed out the text to translate, and someone in class muttered (or perhaps even shouted) "The Parable of the Talents!" It clearly was helpful to that student to know the outlines of the text already. But because I was so ill-read in the Bible, this clue did me no good at all.

This was decades before the internet, so we had to look everything up in books. Consequently, the next day I went to the university bookstore and got myself a pocket edition of the King James Version (KJV) of the Bible, which I carried around in my backpack for the next couple of years.

There are of course many translations of the Bible into English, but the KJV was useful to me for two reasons. One was simply that I had a new(-ish) English version of passages like the Parable of the Talents. But it was also useful because its antique-ier grammar often reflected more closely the grammar of the dead-language texts that we were reading.

As I’ve mentioned elsewhere, I’m taking a class in Old English right now, and it has again been helpful to have some knowledge of the Bible. Although there is a pretty good corpus of Anglo-Saxon writing, there are still a lot of Biblical themes. In fact, in our class I’ve already run across my old friend the Parable of the Talents, and I’ve also encountered the Old English versions of the Parable of the Ten Virgins and the Parable of the Weeds, along with a couple of other glancing references to the New Testament. In each case, I’ve found the relevant passage in the KJV (online these days) to help me decode what I’m reading.[2]

![Medieval-looking painting of the 10 virgins from the parable. In Old English: 'Syllaþ us of eowrum ele; for þam ure leohtfatu sind acwencte.' [KJV: Give us of your oil; for our lamps are gone out.]](https://www.mikepope.com/blog/images/BibleStudiesTenVirgins.png)

But the content and the language of the KJV have proved helpful not just when I want to study old languages. At one point in my education, I remember hearing that it’s not possible to read Milton without having a substantive knowledge of the Bible. I don’t particularly yearn to read Milton, but it’s true that stories from the Bible infuse our literature. So my instinct in high school to take a class in Bible as literature was a good one.

And there’s more. To borrow an idea, see if you know the source of these well-known phrases:

He gave up the ghost

There is no new thing under the sun

A man after his own heart

Don’t cast your pearls before swine

The writing on the wall

Many are called, but few are chosen

Since I’ve loaded the dice here, you won’t be surprised to learn that these are all from the Bible, specifically from the KJV.

The writer Cullen Murphy performed an exercise once in which he took these phrases and several more and sent associates out into the streets to ask people where the phrases were from. People knew the phrases, but they frequently didn’t know the source, although he got a lot of amusingly incorrect guesses.

The language of the KJV has resonated with English speakers for centuries, obviously, as evidenced by how much of it we’ve incorporated into our daily speech. Dozens (hundreds?) of phrases we use all the time were crafted by “certain learned men”[3] in the creation of what in 1611 was known as the Authorized Version.

Tim Stewart, my correspondent in this discussion about the Bible, put it all this way:

On one level [the KJV] is a religious text, of course, but it's also a cultural touchstone. As random data points, in both the movies Day After Tomorrow (2004) and V for Vendetta (2005), there are nonreligious characters who have short scenes where they appreciate and nearly reverence the King James as a piece of literature and an artifact of human civilization. The King James Bible continues to attract interest and spark joy not merely for its religious content but for its enduring textual and linguistic beauty, not even to mention the way its phrases and its phraseology have insinuated themselves into 400 years of English-language literature.

As I say, Tim’s question about my faith was not an unreasonable one—as I glance through my book, it does at times seem to be a bit heavy on the citations from religious sources. But the answer really is that my dabbling in old languages has inevitably familiarized me with various religiously themed texts.

And more generally, of course, I'm an English speaker. So I’m heir to the richness that the KJV and the Bible in general have contributed to our language and culture.

__________

[categories]

personal, language

Wednesday, 4 October 2023

09:46 AM

Today is my first day of retirement. I’ve e-signed all the paperwork; there was a (virtual) going-away party; my computer no longer accepts my work login. I spent September “on vacation” with the idea that my last day would be at the beginning of October so as to extend my healthcare coverage another month.[1] And so it has come to pass.

Grad school, 1980

I spent just over 41 years in the tech industry, which was sort of accidental. I had moved to Seattle in 1979 to go to grad school at UW. At the end of my first year, one of my fellow students asked “Hey, do you need a summer job?” And so I ended up working for a company that provided litigation support: outsourced computer-based facilities for law firms. For us, this mostly consisted of reading and “coding” documents (onto paper forms) for things that the lawyers were looking for.

The woman who ran the company had an interesting approach to hiring the minions for this job: she hired only graduate students, regardless of major. Her theory was that graduate students had shown that they knew how to read carefully and critically. We read depositions, real-estate sales documents, all sorts of stuff. Most of it wasn’t that interesting, and our role was very specific, namely to look for keywords or particular numbers or other small details. As it happens, this was good training for my later career, though of course I didn’t know it then.

When my TA position ran out at UW, I went to work full time for that same company. The timing was fortunate. The IBM PC had just come out, and law firms were interested in what they could do with this new device. Our company quickly created a couple of document-management products, and I ended up doing support and training and even some programming.

From there, my career was similar to that of many others. I moved from this startup to another to another. One of the companies afforded me and the family an opportunity to spend a couple of years in the UK.

With my daughter at Stonehenge in 1990

At some point I moved from being a kind of roving generalist into being a documentation writer. This was back when we still included printed documentation with a product and had to worry about page counts and lead time for printers and bluelines.

In 1992 I got a job with one of Paul Allen’s software companies (Asymetrix), and when that company started teetering, I moved to Microsoft.

I spent 17 years there in all. I had a brief period in Microsoft Bob, a product that was mocked out of the marketplace (but that imo had a lot of very good ideas behind it). I spent the rest of my Microsoft career in the Developer Division, working on a bunch of products that had the word “Visual” in their name: Visual FoxPro, Visual InterDev, Visual Basic, Visual Studio.

About halfway through my Microsoft stint, one of the editors retired, and I made a pitch to the manager of the editing team that she should bring me on as an editor. She took a chance on me, and so I embarked on a formal career as an editor; I alternated between writing and editing for the rest of my time.

When Microsoft had exhausted me, I followed some former colleagues to Amazon as a writer in AWS IAM, a cloud authentication/authorization technology. Unlike some people, I had a good experience at Amazon. Even so, when I was offered a chance to work as a writer at Tableau, I took it. And from there I again followed more colleagues and moved to Google, where I spent just over 6 years editing solutions and related materials, and trying to figure out how to leverage the work of about a dozen editors across a team of hundreds of writers.

Blah-blah. :)

I appreciate that there was a lot of luck in my career. I was in the right place (Seattle) at the right time (start of the PC industry). I had just enough background, namely a smattering of experience with FORTRAN and BASIC. Opportunities came up at the right time (a summer job, a retiring editor). People repeatedly gave me chances or offered opportunities or made career-changing suggestions. My friend Robert (also a writer/editor) and I have over the years kept coming back to this theme: how just plain fortunate we've been in our careers.

Portrait by friend Robert

I have no regrets about my career. Way back when I was an undergraduate and taking a class in BASIC, the prof casually asked me whether I’d considered a computer science major. Oh, no, was my answer: my math debt was so vast that I would have spent years of coursework just to meet the math prerequisites. And so I pursued the humanities and am very happy to have been able to do so, and I like to think that I was able to blend what I learned in school with what I learned on the job.

The tech industry has its issues, no doubt. But for me, at least, it also offered an opportunity to work with many, many smart people who were doing interesting things at world-class companies. Above all—and this is a cliché, but it’s still true—I’m going to miss working with the people.

People ask what I’m going to do in retirement. I kind of kid when I tell people that the first thing I’m going to do is learn to not work. But there’s a grain of truth there. I have many interests, but which ones I’ll really get into, and whether there are other, new interests that I’ll develop, remains to be seen.

Retired and traveling

[categories]

personal